Session 2009/2010

Fifth Report

Public Accounts Committee

Report on

Public Service Agreements – Measuring Performance

TOGETHER WITH THE MINUTES OF PROCEEDINGS OF THE COMMITTEE

RELATING TO THE REPORT AND THE MINUTES OF EVIDENCE

Ordered by The Public Accounts Committee to be printed 5 November 2009.

Report: NIA 22/09/10R Public Accounts Committee

This document is available in a range of alternative formats.

For more information please contact the

Northern Ireland Assembly, Printed Paper Office,

Parliament Buildings, Stormont, Belfast, BT4 3XX

Tel: 028 9052 1078

Membership and Powers

The Public Accounts Committee is a Standing Committee established in accordance with Standing Orders under Section 60(3) of the Northern Ireland Act 1998. It is the statutory function of the Public Accounts Committee to consider the accounts and reports of the Comptroller and Auditor General laid before the Assembly.

The Public Accounts Committee is appointed under Assembly Standing Order No. 56 of the Standing Orders for the Northern Ireland Assembly. It has the power to send for persons, papers and records and to report from time to time. Neither the Chairperson nor Deputy Chairperson of the Committee shall be a member of the same political party as the Minister of Finance and Personnel or of any junior minister appointed to the Department of Finance and Personnel.

The Committee has 11 members, including a Chairperson and Deputy Chairperson, and a quorum of 5.

The membership of the Committee since 9 May 2007 has been as follows:

Mr Paul Maskey*** (Chairperson)

Mr Roy Beggs (Deputy Chairperson)

| Mr Patsy McGlone ** | Ms Dawn Purvis |

| Mr Jonathan Craig | Mr David Hilditch ******* |

| Mr John Dallat | Mr Jim Shannon ***** |

| Mr Trevor Lunn | Mr Mitchel McLaughlin |

| Rt Hon Jeffrey Donaldson MP MLA ******** |

Mr Mickey Brady replaced Mr Willie Clarke on 1 October 2007*

Mr Ian McCrea replaced Mr Mickey Brady on 21 January 2008*

Mr Jim Wells replaced Mr Ian McCrea on 26 May 2008*

Mr Thomas Burns replaced Mr Patsy McGlone on 4 March 2008**

Mr Paul Maskey replaced Mr John O’Dowd on 20 May 2008***

Mr George Robinson replaced Mr Simon Hamilton on 15 September 2008****

Mr Jim Shannon replaced Mr David Hilditch on 15 September 2008*****

Mr Patsy McGlone replaced Mr Thomas Burns on 29 June 2009******

Mr David Hilditch replaced Mr George Robinson on 18 September 2009*******

Rt Hon Jeffrey Donaldson replaced Mr Jim Wells on 18 September 2009********

Table of Contents

List of abbreviations used in the Report

Report

Executive Summary

Summary of Recommendations

Introduction

The Need for Robust Governance Arrangements

Vigorous Performance Reporting

Refining Procedures

Appendix 1:

Minutes of Proceedings

Appendix 2:

Minutes of Evidence

Appendix 3:

Correspondence

Appendix 4:

List of Witnesses

List of Abbreviations used in the Report

PSA Public Service Agreements

OFMDFM Office of the First Minister and deputy First Minister

DETI Department of Enterprise, Trade and Investment

PEDU Performance and Efficiency Delivery Unit

DARD Department of Agriculture and Rural Development

C&AG Comptroller and Auditor General

DFP Department of Finance and Personnel

GVA Gross Value Added

Executive Summary

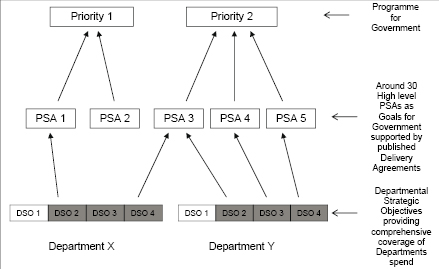

1. Since 1998, Northern Ireland departments have been required to publish Public Service Agreements (PSAs) covering each three-year government spending cycle. These specify the targets to be used to measure performance against key departmental and cross-cutting objectives.

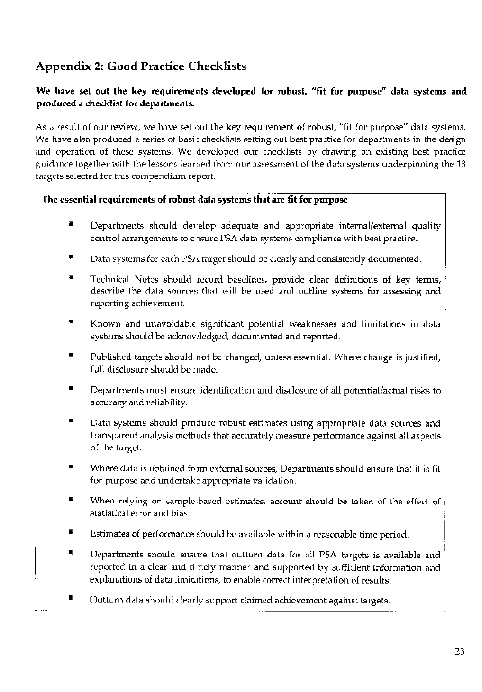

2. Adopting high standards in performance reporting can improve the accountability and transparency of public service delivery, help departments to allocate resources effectively and contribute to robust, evidence-based policy decisions. The Committee has real concerns, however, about the reliability and accuracy of the underlying PSA data systems. This makes it difficult to conclude that reported performance has actually been achieved.

3. OFMDFM has a central co-ordination and oversight role in relation to PSAs but its exercise of these functions was not sufficiently rigorous in the past. Despite subsequent improvements in oversight arrangements, it is clear that the new system has not sufficiently addressed the specific data system limitations identified by the C&AG’s report. Ten years after the launch of PSAs, much still remains to be done before any examination of targets and data systems will produce a clean bill of health.

4. OFMDFM must do more to hold individual departments to account for implementing improvements to weak data systems. At the moment, it is unlikely it has the capacity to do so. Given this gap, the Committee believes that there is a clear need for the C&AG to provide independent oversight of data systems in order to drive forward quality improvements in this area.

5. If the quality of measurement systems is poor, the reported data will also be poor. Senior management must therefore take ownership of this issue. In the past, departmental senior managers have not been sufficiently involved in overseeing data quality and have failed to use technical specialists, such as statisticians and economists, to best effect. Their involvement can help improve the quality of PSA data systems and should be encouraged.

6. There is evidence that reported performance has not always been substantiated by the underlying data. Although there is now a greater degree of challenge exerted by a central team from the Office of the First Minister and deputy First Minister (OFMDFM) and the Department of Finance and Personnel (DFP), the new Delivery Report still represents a subjective assessment of performance. The quality of reporting falls short of best practice and the DFP Departmental Committee has already identified that self-assessed claims of performance achievement were in fact not sustainable.

7. It is inherently difficult to set targets in the public sector as there is rarely a single measure which adequately captures overall departmental performance. However, the Committee was disappointed at the lack of clarity in some targets, their failure to get to the heart of business objectives and evidence of changes to targets during the three-year public spending cycle. There is also evidence of a lack of consistency across departments in terms of whether to select output or outcome measures. A more sophisticated framework of targets could usefully be developed for the next Programme for Government.

Summary of Recommendations

1. The lack of focus on data quality and on the robustness of measurement systems remains a concern. In the Committee’s view, this is an area in which OFMDFM must continue to seek improvement. However, to provide independent assurance to the Assembly, the Committee recommends that OFMDFM and the C&AG jointly develop a work programme of PSA data system validation to assess the reliability and accuracy of the underlying systems and the reported data.

2. All departments have access to a range of specialist staff. The Committee recommends that all departments use in-house resources such as statisticians and economists to improve their oversight of the quality of PSA data systems. This should ensure separation of duties between those responsible for service delivery and those responsible for monitoring and reporting performance.

3. Accounting Officers must take ownership of PSA data systems. The Committee recommends that departments’ Statements of Internal Control include a specific assurance that risks to data quality have been assessed and that appropriate controls have been put in place to mitigate these identified risks.

4. It is of concern that the quality of reporting still falls short of best practice. The Committee recommends that, for each target, the Delivery Report should include the baseline position, interim outturn figures and the latest available data to ensure an objective and quantitative assessment of performance.

5. It is alarming that the performance assessments undertaken by individual departments contradict those of the central Delivery Report and that they use different assessment scales. The Committee recommends that all assessments are based on objective information and that departments align their self-assessment scales to the four-point system used in the central Delivery Report.

6. The summary nature of the Delivery Report limits transparency and scrutiny in the PSA process. The Committee recommends that progress on all targets contained in Delivery Agreements is reported every six months, made available on departmental websites and submitted to the relevant departmental Committees.

7. In the past, too many targets have been overly complicated and difficult to understand and monitor. Departments must ensure that each target is precisely stated and easily understood. Given past limitations in this respect, the Committee expects that all future PSA targets will be specific, measurable, attainable, realistic and timebound, and that, collectively, they cover the main aspects of departments’ key services.

8. Setting targets to measure public sector performance is not an exact science. However, there is a significant divergence in the approaches used by different departments. The Committee recommends that, for the next Programme for Government, OFMDFM takes the lead in developing a more sophisticated framework which introduces greater consistency in the use of output and outcome targets across all departments.

9. Changing targets during the life of the PSA risks undermining user confidence and accountability. The Committee recommends that such changes to targets are avoided but, if absolutely necessary, they must be explicitly disclosed and accompanied by a clear justification of the need for change.

Introduction

1. The Public Accounts Committee met on 8 October 2009 to consider the Comptroller and Auditor General’s report ‘Public Service Agreements – Measuring Performance’. The main witnesses were:

- Mr John McMillen, Accounting Officer, Office of the First Minister and deputy First Minister (OFMDFM);

- Mr Damian Prince, Head of Economic Policy Unit, Office of the First Minister and deputy First Minister (OFMDFM);

- Mr Gerry Lavery, Senior Finance Director, Department of Agriculture and Rural Development (DARD);

- Mr David Sterling, Accounting Officer, Department of Enterprise, Trade and Investment (DETI);

- Mr Richard Pengelly, Public Spending Director, Department of Finance and Personnel (DFP);

- Mr Kieran Donnelly, Comptroller and Auditor General (C&AG); and

- Ms Fiona Hamill, Deputy Treasury Officer of Accounts.

2. Since 1998, Northern Ireland departments have been required to publish Public Service Agreements (PSAs) covering each three-year government spending cycle. These specify the targets to be used to measure performance against key departmental and cross-cutting objectives. Adopting high standards in performance reporting improves the accountability and transparency of public service delivery and can help departments to allocate resources effectively and make robust, evidence-based policy decisions.

3. In practice, reporting of performance relies on data generated from the underlying data systems. The C&AG’s report considered the adequacy of a selection of the systems used by departments to measure performance. This report noted that senior management were not sufficiently involved in PSA data systems, some of the measurement systems were not fit for purpose, and performance was not being reported in a sufficiently clear, timely and comprehensive manner.

4. In taking evidence on the C&AG’s report, the Committee focused on:

- the need for robust governance arrangements;

- the need for PSA reports to be comprehensive, accurate and reliable; and

- the need for departments to improve target-setting procedures.

The Need for Robust Governance Arrangements

The central oversight role of OFMDFM

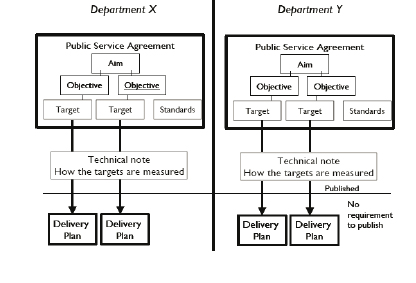

5. OFMDFM has a central co-ordination role in relation to PSAs. This role involves ensuring that departments adopt appropriate PSA targets, co-ordinating annual progress reports and promoting best practice. It is clear that, at the time of the C&AG’s report, OFMDFM did not fulfil its central oversight role to an adequate standard. It did not provide guidance on the design and operation of PSA data systems or monitor compliance with best practice. It also failed to exert a strong challenge function on the selection of targets, the robustness of data systems or the reporting of achievements.

6. OFMDFM accepted that its oversight role was not sufficiently rigorous in the past. However, it told the Committee that significant improvements have been introduced into the new PSA process. Key developments have included the issue of new guidance in June 2007, the development of new governance structures, the selection of more cross-cutting and strategic targets, the requirement to produce detailed Delivery Agreements for each target and the establishment of a central team to challenge reported performance assessments.

7. The Committee welcomes the improvements in oversight made since the C&AG’s examination, as these should contribute to an improved PSA process. However, it is clear to the Committee that the new system has not sufficiently addressed the specific data system limitations identified by the C&AG. These have led to real concerns about the reliability and accuracy of the data and make it difficult to conclude that reported performance was actually achieved.

8. OFMDFM’s Accounting Officer offered assurances to the Committee that, were the C&AG to revisit this topic, the PSA targets and systems of departments would “fare much better”. He acknowledged that past results had been disappointing and said that the situation would improve incrementally. Ten years after the launch of PSAs, it appears therefore that much still remains to be done before any examination of targets and data systems will produce a clean bill of health.

9. The Committee expects OFMDFM, as part of its central oversight role, to hold individual departments to account for implementing improvements to weak data systems. At the moment, it is not clear that it has the capacity to do so. While the more recent involvement of the Performance and Efficiency Delivery Unit (PEDU) is welcome, it is focused on helping departments deliver targets rather than assessing the performance management framework. Given this gap, the Committee believes that there is a clear need for independent oversight of data systems to drive forward quality improvements in this area.

Recommendation 1

10. The lack of focus on data quality and on the robustness of measurement systems remains a concern. In the Committee’s view, this is an area in which OFMDFM must continue to seek improvement. However, to provide independent assurance to the Assembly, the Committee recommends that OFMDFM and the C&AG jointly develop a work programme of PSA data system validation to assess the reliability and accuracy of the underlying systems and the reported data.

The involvement of senior managers and technical specialists in the PSA process

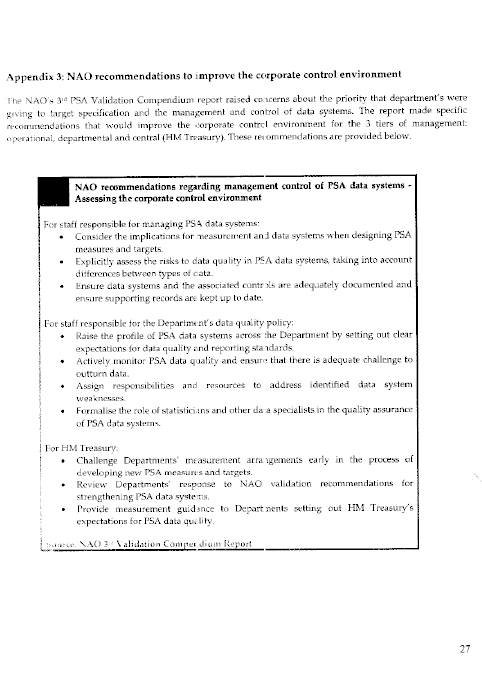

11. A strong corporate control environment is key to the establishment and operation of robust PSA data systems. Senior managers within individual departments are ultimately responsible for the quality of PSA data systems. They have a role in ensuring that risks to data quality are formally assessed and that appropriate quality controls are established.

12. The C&AG’s report noted that senior managers were not sufficiently involved in overseeing data quality. This is disappointing. Both DARD and DETI accepted the criticism but outlined improvements which have been introduced under the 2008-2011 Programme for Government. These include the appointment of Senior Responsible Officers for each PSA, supported by Data Quality Officers. In addition, DARD stated that it has commissioned an Internal Audit review to ensure its PSA data systems and reporting procedures are compliant with good practice.

13. Technical specialists, such as statisticians and economists, can also play an important quality control role. Their involvement can help departments to select meaningful targets, identify appropriate data sources and accurately report performance. All government departments have access to such expertise. The C&AG’s report noted, however, that the use of such specialists was often limited and, even where they were involved, their concerns surrounding data systems were not fully addressed.

14. During his evidence, the DETI Accounting Officer highlighted the specific actions his Department has taken in this regard. He has created an internal Strategic Planning, Economics and Statistics Division which has central responsibility for the development of PSAs and targets, the maintenance of the data systems, the validation of the data systems and the monitoring of performance. The Committee welcomes these developments and believes they should be considered by other departments.

Recommendation 2

15. All departments have access to a range of specialist staff. The Committee recommends that all departments use in-house resources such as statisticians and economists to improve their oversight of the quality of PSA data systems. This should ensure separation of duties between those responsible for service delivery and those responsible for monitoring and reporting performance.

16. Quality assessment of underlying data systems needs to be fully embedded in the PSA process. It is not clear from the evidence presented to the Committee whether systems are being risk assessed and controls put in place. If the quality of measurement systems is poor, the reported data will also be poor; this can lead to erroneous judgements. For this reason, there must always be full disclosure of known risks and data weaknesses.

Recommendation 3

17. Accounting Officers must take ownership of PSA data systems. The Committee recommends that departments’ Statements of Internal Control include a specific assurance that risks to data quality have been assessed and that appropriate controls have been put in place to mitigate these identified risks.

Vigorous Performance Reporting

Performance reports must be based on comprehensive, accurate and reliable information

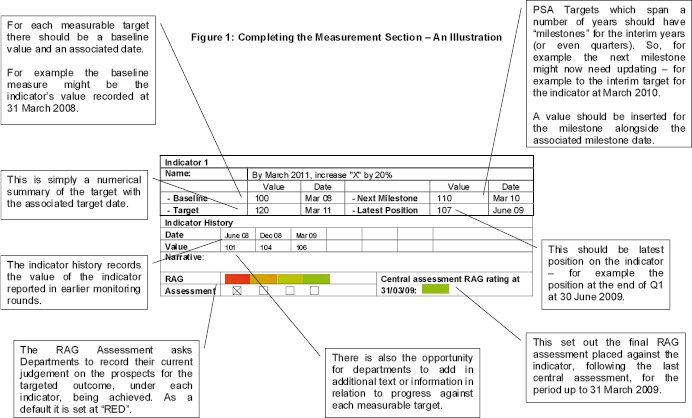

The need for performance reporting to be based on actual data

18. The introduction of PSAs formalised the process for reporting performance to the Assembly and the public on departmental achievement against key objectives. Departments should produce timely, transparent and comprehensive performance reports and make these publicly accessible. It is disappointing to note that the C&AG’s report identified a number of cases where reported performance was not substantiated by the underlying data. These included instances where performance was not compared against baselines, actual outturn and historical data were not provided and there was inadequate interpretation of results.

19. In response, departments cited the introduction of a new centralised reporting framework which they consider has improved the quality of PSA reporting. This takes the form of a Delivery Report which adopts a more detailed four point assessment scale.

20. The Committee recognises that the new report is more comprehensive and has benefited from PEDU and OFMDFM challenge. There is now a more detailed degree of analysis of results and this is welcomed. Nevertheless, the Delivery Report still represents a subjective assessment of performance. It does not present, for all targets, the underlying data supporting the assessment. For this reason, the Committee is of the view that the report, while an improvement, is still not fit for purpose. More than a decade after the introduction of PSAs, this is an unacceptable position.

Recommendation 4

21. It is of concern that the quality of reporting still falls short of best practice. The Committee recommends that, for each target, the Delivery Report should include the baseline position, interim outturn figures and the latest available data to ensure an objective and quantitative assessment of performance.

The evidence of contradictory assessments of performance

22. A key purpose of PSAs is to give reliable and robust performance assessments in which the general public and elected representatives can have confidence. Earlier this year, DFP submitted a performance report to its departmental Committee outlining the extent to which measures had been achieved. During questioning of departmental officials, it became apparent that the self-assessed claims were in fact not sustainable.

23. Furthermore, the departmental Committee identified that DFP was using a seven-point assessment tool which was completely different from the central team’s four-point scale. This clearly risks causing confusion to the Assembly and the general public. Departments currently have the independence and flexibility to adopt their own monitoring systems. However, given that the two reports came to such differing conclusions, it is clear that this represents a fundamental weakness in the performance assessment process.

Recommendation 5

24. It is alarming that the performance assessments undertaken by individual departments contradict those of the central Delivery Report and that they use different assessment scales. The Committee recommends that all assessments are based on objective information and that departments align their self-assessment scales to the four-point system used in the central Delivery Report.

25. As there are concerns about reported performance figures, the Committee welcomes DFP’s subsequent written assurance that senior civil servants’ bonuses were “not based on the data systems underpinning the delivery of PSAs”. Until such data systems are robust, and produce reliable measurements of performance, achievement of PSA targets should not be used as a basis for awarding bonuses.

The high level nature of the Delivery Report

26. A further limitation of the new Delivery Report is its high level nature. OFMDFM indicated that the report is designed to inform the Executive and the Assembly of the general direction of travel, highlighting what is going right and what is going wrong. Although more detailed information is available in the individual Delivery Agreements, these are not currently published. This is not a tenable position. In the absence of the necessary detail, Assembly members can take no assurance on the validity and accuracy of high level self-assessments.

Recommendation 6

27. The summary nature of the Delivery Report limits transparency and scrutiny in the PSA process. The Committee recommends that progress on all targets contained in Delivery Agreements is reported every six months, made available on departmental websites and submitted to the relevant departmental Committees.

Gaps in annual performance reporting

28. The C&AG’s report highlighted that, during the previous Programme for Government, OFMDFM failed to publish a composite performance report for either 2006-07 or 2007-08. This is unacceptable as the absence of a composite report for this period prevented full and transparent assessment of performance by the Assembly and the public. Following the hearing, DETI provided written evidence of performance against its previous targets to the end of 2008. While this is welcomed, it highlights the lack of accountability which arose as a result of the non-publication of a composite report during this period. The Committee expects that, in future, achievement for all years and for all targets will be published centrally by OFMDFM.

Refining Procedures

It is important that departments develop more sophisticated procedures before selecting the next round of PSA targets

The need to adopt SMART targets

29. It is inherently difficult to set targets in the public sector. Unlike the private sector there is rarely a single measure, such as profitability, which adequately captures overall performance. Nevertheless, it is a well-established principle that, as far as possible, targets should be specific, measurable, attainable, realistic and timebound (SMART). Failure to adopt SMART targets can lead to difficulties in assessing overall performance and improving public service delivery.

30. The Committee was particularly disappointed that the major strategic targets relating to child poverty have been deficient in this respect. OFMDFM acknowledged that, at the time of the C&AG’s report, the targets were poorly drafted and the data required to measure improvement were not initially collected. When data became available, they were not statistically accurate, although OFMDFM claimed that they were sufficiently plausible to measure progress. Despite this assurance, there continue to be data system problems around this key policy area. There is no accepted definition of severe child poverty and currently there is no measurement system in place. In effect, OFMDFM is shooting at a target that it cannot see.

31. Problems were also evident with a number of other targets. For example, discussions with DARD highlighted that it was not always clear what was to be measured; some targets failed to get to the heart of business objectives, while others tended to be overly complicated and, as a consequence, difficult to understand and interpret.

Recommendation 7

32. In the past, too many targets have been overly complicated and difficult to understand and monitor. Departments must ensure that each target is precisely stated and easily understood. Given past limitations in this respect, the Committee expects that all future PSA targets will be specific, measurable, attainable, realistic and timebound, and that, collectively, they cover the main aspects of departments’ key services.

The need for consistency of approach in selecting targets

33. The difficulty in selecting targets is further evidenced by the differing approaches adopted by DARD and DETI in relation to the use of Gross Value Added[1] (GVA). DARD has decided to dispense with this target arguing that, as an outcome measure, it is not within DARD’s ability to influence. Conversely, DETI has continued to use this measure as a target because it considers it a key indicator of long term economic development. While both these arguments may have their own merits, the difference in approach implies the need to refine the target selection process. There must be greater clarity on whether PSAs should only contain targets where departments can influence achievement.

Recommendation 8

34. Setting targets to measure public sector performance is not an exact science. However, there is a significant divergence in the approaches used by different departments. The Committee recommends that, for the next Programme for Government, OFMDFM takes the lead in developing a more sophisticated framework which introduces greater consistency in the use of output and outcome targets across all departments.

The need for targets not to be subject to unnecessary change

35. PSA targets are selected at the outset of each three-year spending cycle. They should be consistently stated and should not be subject to unnecessary change. Changing targets during the three-year life of a PSA undermines user confidence, as it can appear that departments are arbitrarily making changes to increase the likelihood of achievement. The Committee of Public Accounts at Westminster has been critical of frequent changes to targets as this weakens their ability to serve as useful and meaningful tools of accountability and retain credibility[2].

36. The C&AG’s report noted that one of DETI’s agencies, InvestNI, amended its target on the establishment and support of new businesses in disadvantaged areas. It was clear that these changes risked confusing readers and undermining the transparency of performance reporting. Following the session, DETI provided the Committee with a written submission containing details of the various changes to the target. In this, DETI stated that the revisions reflected changes in the reporting time period rather than in the target itself. This explanation does not offset the Committee’s concerns.

Recommendation 9

37. Changing targets during the life of the PSA risks undermining user confidence and accountability. The Committee recommends that such changes to targets are avoided but, if absolutely necessary, they must be explicitly disclosed and accompanied by a clear justification of the need for change.

[1] GVA measures the contribution to the economy of each individual producer, industry or sector in the United Kingdom.

[2] Committee of Public Accounts, Session 2006-07, Improving literacy and numeracy in schools (Northern Ireland) HC 108


Appendix 1

Minutes of Proceedings of the Committee Relating to the Report

Thursday, 1 October 2009

Room 144, Parliament Buildings

Present: Mr Roy Beggs (Deputy Chairperson)

Mr John Dallat

Rt Hon Jeffrey Donaldson MP MLA

Mr David Hilditch

Mr Trevor Lunn

Mr Patsy McGlone

Mr Mitchel McLaughlin

Mr Jim Shannon

In Attendance: Ms Aoibhinn Treanor (Assembly Clerk)

Mr Phil Pateman (Assistant Assembly Clerk)

Miss Danielle Best (Clerical Supervisor)

Mr Darren Weir (Clerical Officer)

Apologies: Mr Paul Maskey (Chairperson)

Ms Dawn Purvis

Mr Jonathan Craig

The meeting opened at 2.03 pm in public session.

4. Briefing on NIAO report ‘Public Service Agreements – Measuring Performance’.

Mr Kieran Donnelly, C&AG; Mr Eddie Bradley, Director; Claire Dornan, Audit Manager; and Mr Joe Campbell, Audit Manager, briefed the Committee on the report.

The witnesses answered a number of questions put by members.

[EXTRACT]

Thursday, 8 October 2009

The Senate Chamber, Parliament Buildings

Present: Mr Paul Maskey (Chairperson)

Mr Roy Beggs (Deputy Chairperson)

Mr Jonathan Craig

Mr John Dallat

Rt Hon Jeffrey Donaldson MP MLA

Mr Trevor Lunn

Mr Patsy McGlone

Mr Mitchel McLaughlin

Ms Dawn Purvis

Mr Jim Shannon

In Attendance: Ms Aoibhinn Treanor (Assembly Clerk)

Mr Phil Pateman (Assistant Assembly Clerk)

Miss Danielle Best (Clerical Supervisor)

Mr Darren Weir (Clerical Officer)

Apologies: Mr David Hilditch

The meeting opened at 2.01 pm in public session.

3. Evidence on NIAO report ‘Public Service Agreements – Measuring Performance’.

The Committee took oral evidence on the above report from:

Mr John McMillen, Accounting Officer, Office of the First Minister and deputy First Minister (OFMDFM); and supporting officials Mr Damian Prince, OFMDFM; Mr Gerry Lavery, DARD; Mr David Sterling, DETI; and Mr Richard Pengelly, PEDU.

The witnesses answered a number of questions put by the Committee.

3.12 pm Mr Lunn left the meeting.

3.38 pm Mr McLaughlin and Mr McGlone left the meeting.

Members requested that the witnesses should provide additional information to the Clerk on some issues raised as a result of the evidence session.

[EXTRACT]

Thursday, 15 October 2009

Room 144, Parliament Buildings

Present: Mr Paul Maskey (Chairperson)

Mr Roy Beggs (Deputy Chairperson)

Mr John Dallat

Rt Hon Jeffrey Donaldson MP MLA

Mr David Hilditch

Mr Trevor Lunn

Mr Patsy McGlone

Mr Mitchel McLaughlin

Mr Jim Shannon

In Attendance: Ms Aoibhinn Treanor (Assembly Clerk)

Mr Phil Pateman (Assistant Assembly Clerk)

Miss Danielle Best (Clerical Supervisor)

Mr Darren Weir (Clerical Officer)

Apologies: Mr Jonathan Craig

Ms Dawn Purvis

The meeting opened at 2.03 pm in public session.

5. Issues Paper on evidence session on NIAO report ‘Public Service Agreements – Measuring Performance’.

Members considered an issues paper on this evidence session.

[EXTRACT]

Thursday, 5 November 2009

Room 144, Parliament Buildings

Present: Mr Paul Maskey (Chairperson)

Mr Roy Beggs (Deputy Chairperson)

Rt Hon Jeffrey Donaldson MP MLA

Mr David Hilditch

Mr Patsy McGlone

Mr Mitchel McLaughlin

Ms Dawn Purvis

Mr Jim Shannon

In Attendance: Ms Aoibhinn Treanor (Assembly Clerk)

Mr Phil Pateman (Assistant Assembly Clerk)

Miss Danielle Best (Clerical Supervisor)

Mr Darren Weir (Clerical Officer)

Apologies: Mr John Dallat

Mr Trevor Lunn

Mr Patsy McGlone

The meeting opened at 2.04 pm in public session.

8. Consideration of Draft Committee Report on Public Service Agreements – Measuring Performance

Agreed: Members ordered the report to be printed.

Agreed: Members agreed that the report would be embargoed until 00.01 am on Thursday, 26 November 2009.

Agreed: Members agreed to launch the report with a press release to be agreed at a later meeting and to a Committee launch at a relevant venue.

[EXTRACT]

Appendix 2

Minutes of Evidence

8 October 2009

Members present for all or part of the proceedings:

Mr Paul Maskey (Chairperson)

Mr Roy Beggs (Deputy Chairperson)

Mr Jonathan Craig

Mr John Dallat

Mr Jeffrey Donaldson

Mr Trevor Lunn

Mr Patsy McGlone

Mr Mitchel McLaughlin

Ms Dawn Purvis

Mr Jim Shannon

Witnesses:

| Mr Gerry Lavery | Department of Agriculture and Rural Development |

| Mr John McMillen | Office of the First Minister and deputy First Minister |

| Mr David Sterling | Department of Enterprise, Trade and Investment |

| Mr Richard Pengelly | Department of Finance and Personnel |

Also in Attendance:

| Mr Kieran Donnelly | Comptroller and Auditor General |

| Ms Fiona Hamill | Deputy Treasury Officer of Accounts |

1. The Chairperson (Mr P Maskey): Today, the Committee will address the matters raised in the Audit Office report ‘Public Service Agreements — Measuring Performance’. Mr John McMillen, accounting officer for the Office of the First Minister and deputy First Minister (OFMDFM), is here. You are very welcome.

2. Mr John McMillen (Office of the First Minister and deputy First Minister): I am joined by Damian Prince, head of the economic policy unit, who oversees the operation of the Programme for Government monitoring, and Richard Pengelly, public spending director and head of the performance and efficiency delivery unit (PEDU) in the Department of Finance and Personnel (DFP). His team helps OFMDFM in looking at departmental performance. I am also joined by Gerry Lavery, senior finance director of the Department of Agriculture and Rural Development, and by David Sterling, permanent secretary at the Department of Enterprise, Trade and Investment.

3. The Chairperson: I believe that this is your first time before the Public Accounts Committee. You have done well to escape us so far.

4. Mr McMillen: Please be gentle with us.

5. The Chairperson: We certainly will.

6. Paragraph 1.1 of the report outlines the important role of public service agreements (PSAs) in reporting performance, improving service delivery and promoting accountability. In retrospect, is your Department’s oversight role in that process sufficient?

7. Mr McMillen: Before I start to answer, I wish to put it on record that the Department welcomes the Northern Ireland Audit Office report. It is, and will remain, an excellent source of advice, best practice and guidance on this subject.

8. The report highlighted a number of weaknesses evident in the data and the procedures used to monitor the reporting of the 13 PSAs in 2006-07. As the Committee is aware, however, we have put in place revised procedures, protocols and delivery mechanisms to monitor and report the current Programme for Government. Although the design of those new arrangements preceded the publication of the Audit Office report, we were able to draw on the emerging findings of the audit team and the work carried out by the National Audit Office, and also on Treasury guidance. We have since issued guidance to Departments requiring them to produce delivery agreements. Those agreements aim to strengthen accountability and confidence in delivery and, where PSA outcomes cut across departmental boundaries, clearly explain the contributions of each Department.

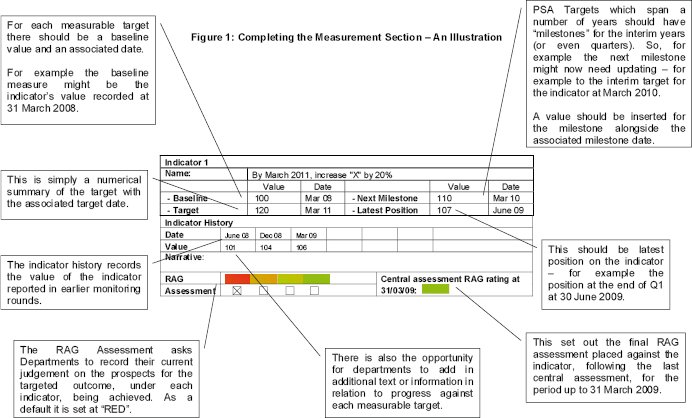

9. Importantly, the delivery agreements also require the production of a measurement annexe for each target, and it is that which deals with any data issues. I am not saying that we now have the procedure perfect. Indeed, the delivery report that was delivered to the Executive and Assembly in June 2009 still draws attention to some failings in the data supporting delivery of PSA targets. However, the fact that we are now aware of those shortcomings and reporting them — and, indeed, actively challenging them — demonstrates that we have taken on board the issues that are highlighted in the Comptroller and Auditor General’s report.

10. Returning to your question, Chairman, the findings of the Audit Office report were certainly disappointing. However, it was looking at the previous Programme for Government, and at particular complex data-set issues, which, in the main, relate to a minority of the PSA targets overall. Indeed, of the PSA delivery agreements for OFMDFM, complex data sets relate to about 25%.

11. Therefore, although the report is disappointing, I do not think the conclusion could be drawn that it reflected poor performance across all Departments. Indeed, the Audit Office said that that is not evidenced on those issues.

12. The Chairperson: Do you regard the Department’s oversight role as having been sufficient?

13. Mr McMillen: The previous technical notes were not as robust as what is now required in the delivery agreements and the guidance that followed. In the early part of the decade, we were still learning how to operate the system. It was evolving, as it did in Whitehall and other jurisdictions. We were learning, and we applied what appeared to be best practice at the time. Perhaps we did not apply it as rigorously as we should have. We have learned from that, and we now apply it rigorously.

14. The Chairperson: Have lessons been learned?

15. Mr McMillen: Yes.

16. The Chairperson: Paragraph 1.12 of the report states that a “radically different approach” to PSAs has been adopted and that this provides an assurance that the new data systems are “fit for purpose”. Can you outline how that has been achieved?

17. Mr McMillen: We used a system that was based on a different approach to the PSA targets. Previously, targets tended to be departmental. We have now tried to embed the PSA targets in the strategic priorities for the Executive. They are much more cross-cutting.

18. The guidance requires public service delivery agreements for each of the PSAs, and for there to be a responsible Minister and senior responsible owner within the Departments. The agreements require the establishment of PSA delivery boards for each PSA, which are made up of senior officials from across the various Departments that contribute to that delivery. There is also guidance for the Department on how performance is to be measured, including a measurement annexe that includes issues around data sets and issues raised in the Audit Office report. There is a much more robust system of guidance for Departments to follow.

19. The key point is the challenge function that is applied at the centre by a team comprising officials from the economic policy unit in OFMDFM, PEDU and the Supply division in DFP. That team monitors performance on a quarterly basis and challenges Departments on how they are performing. That is ratcheting up the delivery mechanisms and making Departments more accountable.

20. The Chairperson: What is the time frame? Paragraph 1.12 refers to targets that were set in 2006-07. There was devolution in May 2007.

21. Mr McMillen: The main guidance which improved the situation was issued by DFP early in 2007 ahead of the Programme for Government development. The research was being done in 2006-07. That guidance has followed through into the formation of the Programme for Government. The monitoring system that was set up was also put forward at that stage, although it was not approved by the Executive until March 2009. However, Ministers, through DFP and the monitoring rounds, have been monitoring performance against the new guidance and the PSAs on a quarterly basis as part of the monitoring of the Budget.

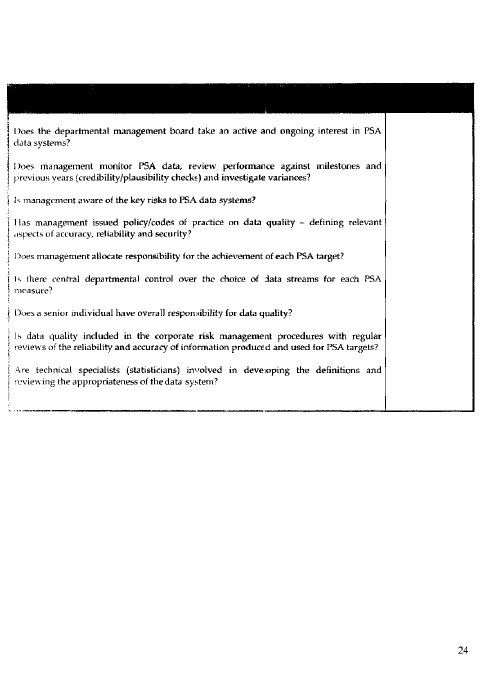

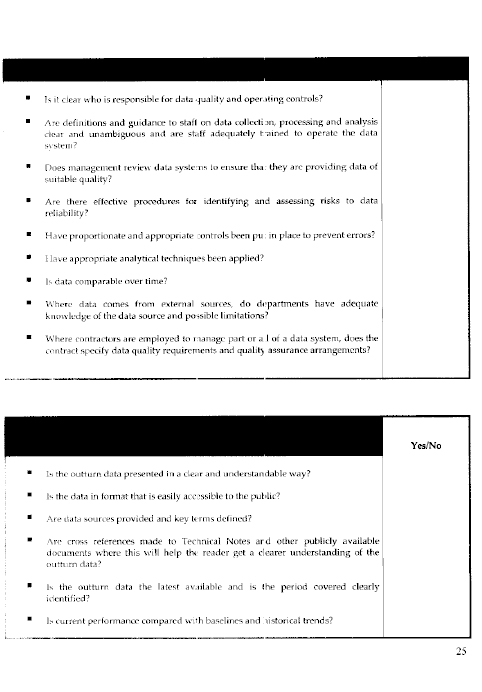

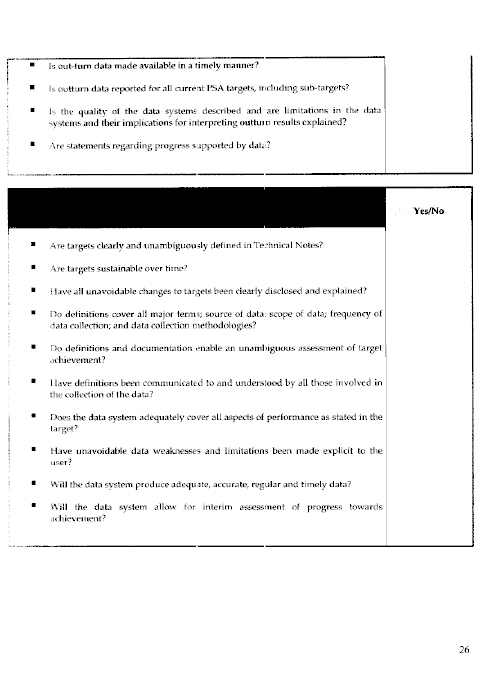

22. The Chairperson: Paragraph 1.11 of the report refers to a “good practice checklist” developed by the Audit Office. If the Audit Office revisits that area and tests a sample of the new PSA data systems using the checklist, will the resulting report tell us a story of significant improvement?

23. Mr McMillen: All Departments welcome the checklist. It is very useful to be able to assess how we are doing against a checklist. If the Audit Office looked at how the new system matches up against that checklist, we would fare much better. However, I think that the real value in the checklist will be in the next Programme for Government, because it gives very good advice on defining and setting targets and on how those targets are going to be measured. That will form part of the evolution of how we are improving performance management across the Government.

24. The Chairperson: Are you saying that if the Audit Office revisits that, no improvements will be seen until the next Programme for Government?

25. Mr McMillen: No, I think that the current Programme for Government monitoring processes are showing an improvement in how we are reporting. Evidence of that is contained in the view of the Confederation of British Industry (CBI), which, in response to the delivery report, observed that there was a big improvement on what has gone before. The CBI saw it as being transparent and open, and it welcomed that. The Assembly’s Research and Library Services has also seen the critique, welcomed it and said that it had shown a big improvement. Therefore, the current performance system is better than what was in place previously. There are still problems with it, but we have reported those to the Executive, and Departments are dealing with them in each monitoring round.

26. The Chairperson: The scale of some of the weaknesses in the data system led to some real concerns about the reliability and accuracy of the claims that were made about performance. The Committee would like your assurances that such data systems were not relied on when senior managers’ performance bonuses were being determined.

27. Mr McMillen: Senior managers’ bonuses are a matter for the permanent secretaries. I am sure that many factors play into those; however, I cannot assure the Committee one way or the other as to how performance on the delivery of Programme for Government targets feeds into such determinations. Data sets and their management would be a small part of the process, but I have no information on how that feeds into the determination of the bonuses.

28. The Chairperson: Would it be possible for you to go back to the Department and ask whether the Committee could get a look at some of that information?

29. Mr McMillen: I shall certainly see what I can do for you; however, there may be some difficulty in how it relates.

30. The Audit Office report noted that, given that the data sets themselves are not being managed, it is difficult to conclude that performance is being achieved. However, the report did not necessarily say that it is not being achieved. Therefore, that is a difficult issue. However, I shall take your point on board and see what I can find out for you.

31. The Chairperson: The Committee may end up drafting some written questions to the Department on that matter.

32. Mr Shannon: You and I meet regularly in Committees, so we are not strangers. My membership of the Committee for the Office of the First Minister and deputy First Minister means that I am aware of your role in OFMDFM.

33. It is clear that OFMDFM has a central co-ordination role, and I know that you and Assembly Members understand that function. However, it seems that you have not provided guidance on designing or operating PSA systems. In the absence of such guidance, how do you intend to ensure the quality of the data that is produced? That is what the issue is about; you must be able to report to the Assembly on those data so that it can see the targets and the performance.

34. Mr McMillen: DFP issued the guidance in March 2007, I believe. It was put together by a joint team from OFMDFM and DFP. It was issued by DFP ahead of the agreement on monitoring frameworks. The joint guidance is out, and it tells Departments how they should set up delivery agreements, how they should put their measurement annexes together, and how they should demonstrate that.

35. Mr Shannon: It seems that the data is not there. I understand that the purpose of the system is to make the Assembly, the media and other Departments aware of the data that is produced. If the data is not there and has not been there, is it not difficult to ascertain performance?

36. Mr McMillen: We need to make a distinction between the position in 2006-07, with which the Audit Office report deals, and what is extant today. The guidance was issued subsequent to the dates that are covered in the report, and the Programme for Government reporting system now monitors against that new guidance. The central team is made up of staff from the economic policy unit, PEDU and DFP supply. That team issues challenges against that guidance and takes data issues into account. I am on PSA delivery boards and, from my experience, I know that they work on data sets and data issues. Therefore, a mechanism is now in place to challenge the data issues.

37. Indeed, some targets were given red or amber status in the delivery report because of data issues, where the central team said that standards for data were not being reached, so it pushed the targets back to Departments for them to address for future monitoring rounds.

38. Mr Shannon: Are you telling us that the new system will overcome past difficulties?

39. Mr McMillen: I believe that those difficulties are being overcome and that that will continue; the situation will improve incrementally.

40. Mr Shannon: Will the same monitoring system be in place for reporting to the Assembly and all the Committees so that they will be aware of what is happening through the new system?

41. Mr McMillen: The First Minister and deputy First Minister will take the report to the Executive and the Assembly. I believe that a take-note debate on the first report, which covers 2008-09, is scheduled for some time in this session.

42. The Committees have also been receiving departmental responses. As you are aware, I was up in front of the OFMDFM Committee to talk about the performance of my Department.

43. Mr Shannon: Paragraph 2.9 of the report sets out the key components of technical notes. It states that they should: “set baselines; provide definitions of key terms; set out clearly how success will be assessed; describe the data sources that will be used; and outline any known and unavoidable significant weaknesses or limitations in the data system.”

Those are the criteria. However, paragraph 2.10 refers to several deficiencies that were found when the Audit Office examined the technical notes. It seems that there were deficiencies in the technical notes provided by Departments in which at least one of those guidelines was not used. It seems very unusual, if not wrong, to have guidelines and then ignore them.

44. Mr McMillen: I accept the finding in the Audit Office’s report that the criteria were not being applied in 2006-07. The previous system lacked a challenge function at its centre to push the Departments back and examine what was coming through. We now have the central team who carry out that function. We have published measurement annexes for delivery agreements, which set out the criteria. The guidance requires Departments to include in their measurement annexes criteria very similar to those set out in paragraph 2.9. That is now being assessed by the central team. If something is not satisfactory, it will be reported back to Departments. In some instances, red or amber status is given to a target because it cannot be evidenced.

45. Mr Shannon: Paragraph 2.10 also states that:

“a number of the Technical Notes were factually inaccurate."

Will you assure the Committee that that will not happen again, and give us an explanation as to why it happened?

46. Mr McMillen: I do not have an explanation for individual Departments, but I assure the Committee that we now look at the accuracy of technical notes as part of our central monitoring. We also question baselines; if there are errors, we immediately go back to the Department to ask why.

47. Mr Shannon: The point that we are making is that it cannot be that difficult to have technical notes in place. We look forward to a vast improvement.

48. Paragraph 3.22 of the report refers to a delay — and again, no delivery report. The Public Accounts Committee has a role as the policeman of the Assembly and its Committees. We are concerned about the delay in publishing the 2006-07 report; performance for a large number of targets went unreported as a result. From the Programme for Government and Budget website, I see that the last old-style performance report was for 2005-06. Therefore, there is a gap between the old-style and the new-style reports for the year 2007-08. How can the Assembly assess the performances of Departments in 2007-08 if we do not have a report?

49. Mr McMillen: I accept that there were delays in getting the 2006-07 report published, which, as reported, were due to resource constraints. The Department is committed to producing a report on the Programme for Government as per the monitoring framework. As Members will be aware, we produced the first report for 2008-09 in June. We are gathering and recording information for the next report for general information within Departments, and we will be producing other reports every six months. We are committed to doing that.

50. Mr Damian Prince (Office of the First Minister and deputy First Minister): I can reassure members that, since the Executive approved the framework for monitoring the Programme for Government on 5 March, we have produced three reports on the performance of each Department. We produced an indicative report at the end of December, which was a dry run to ensure that we had the correct systems in place to properly monitor what was happening. We also produced a delivery report as at the end of March, which members will have seen, and which has been placed in the Assembly. We have also commissioned a report as at the end of June 2009, and that is a good way along.

51. Members can be assured that there is now a process in place. Even the delivery report at the end of March is not a full stop; it is a punctuation mark along the whole journey of reporting back on the Programme for Government. There will be regular update reports and regular challenges. The benefit of the new system is that it has changed the culture and the approach. The delivery of the Programme for Government is being driven, monitored and interrogated by the centre, rather than just by individual Departments.

52. Mr Shannon: I understand the explanation that you have given, but are you saying that there was no formal reporting of PSA performance for either 2006 or 2007? Has that been lost, or acted on, or are you saying that it is just a mistake from the past, but that you have now moved on and will get it right in the future? Is that what you are saying?

53. Mr Prince: My understanding is that, for the end of each of those years, performance was reported in the accounts of individual Departments and through their departmental boards. There was no composite report, as had been produced earlier. At that stage, in 2006-07, we were on the cusp of a new direction with a new Administration and the design of new systems. Most energy and effort went into getting the new system right and getting something nailed down to monitor the current Programme for Government. That is where the main energy and attention now centres.

54. Mr Shannon: Are you simply putting your hands up and saying that you got it wrong for those two years, but that you will get it right now? Is that it?

55. Mr McMillen: I would point out that that was prior to devolution. We have now produced a report for the first year of the new Programme for Government for 2008-2011, and intend to continue to produce reports for Committees every six months or eight months.

56. Mr Shannon: In relation to paragraph 3.21 — I think Dusty Bin was on ‘3-2-1’ a long time ago. That is probably showing my age. Paragraph 3.21 of the report, which deals with the introduction of PSAs, notes that, in order to comply with good practice, performance reports should:

“Include latest outturn figures, compare performance against baselines and provide historical trend data".

I do not see any of those three things in OFMDFM’s new-style delivery report, issued in June 2009, which the Committee has seen. Why does the new approach not follow basic best practice?

57. The Chairperson: I ask members and witnesses to speak up a bit, as the sound system is of very poor quality.

58. Mr McMillen: The delivery report to the Executive, and thus the Assembly, is not intended to be a detailed analysis of each individual target, but is rather designed to inform the Executive and the Assembly of the direction of travel, showing where we are doing well and highlighting areas that need further examination. It does not go into detail.

59. Mr Prince: The OFMDFM Committee also requested the detail that you are looking for. One member said that people do not feel the greens that are being highlighted in the reports. The delivery report is essentially a document to give the Executive a high-level overview of what is going right and what is going wrong, but it is built on a lower level of detail, which is contained in the delivery agreements. Those cover things like what the baseline is for each individual target, what progress has been made, what percentage of completion has been achieved, what milestones have been reached and what has been missed. The central delivery team reviews those reports and challenges Departments.

60. The red/amber/green (RAG) status is determined on the basis of those reports. When a report makes a claim that something has green status, we interrogate it further to look for the evidence that it should have that status. At that point we get down to the lower level of detail. If Departments are not at the point that they said they would be at, we will mark the rating down. That information is available not in the delivery report, but at the next level down, in the delivery agreements and the information that Departments submit to substantiate their RAG status.

61. Mr Shannon: The key issue for Committee members is how we can access figures if the three best practice outlines that we have in front of us have not been followed. If the figures are not made accessible through the best practice system, the Assembly is almost being misled about how that is done. I am sure that that is not your intention; perhaps you need to reassure us on that point.

62. Mr McMillen: I can reassure you that there is no desire to mislead anyone. It was a balance between overloading information and having a composite report that is acceptable, easily read and quickly digested. It may be that we have overdone that in some instances. We would welcome any feedback from the Committee on whether the level of information should be expanded. The report was welcomed by the CBI and others who considered it to be a big improvement and regarded it as being transparent, open and quickly accessible. I can appreciate some of the detail, and we can look at the possibility of that being accessed through the departmental delivery agreements on departmental websites.

63. Mr Dallat: Glossy reports such as this are about real people; people who are badly disadvantaged, children who are living in poverty, and so on. The Assembly depends on the data collected to influence the political change that is needed. Therefore, what we are discussing this afternoon is something that is really serious. Do you agree?

64. Mr McMillen: I do.

65. Mr Dallat: Public service agreement 13 is the only target selected, and it did not meet any of its criteria. Is that not an awful indictment? PSA 13 is about child poverty; the failure to achieve the target means that children who are suffering from deprivation and poverty will not have their needs addressed because the data is toxic or is being googled from the Internet and is not relevant to what is happening on the ground.

66. Mr McMillen: I certainly accept the point that real data is important in making policy decisions and driving forward. I do not necessarily make the connection that the row of Xs against PSA 13 leads to the conclusion that we are not making an improvement on that. In mitigation, PSA 13 is a good example of a target that was badly drafted at that time. It includes targets such as improving prospects and opportunities, which are very difficult things to measure and to get the data sets in place for. Many of the Xs are there because we could not evidence some of those issues.

67. The report refers to inaccuracies in the hard data on child poverty. A lot of that arose because of the system for measuring child poverty. We wanted to include data going back to 1998-99, but it was not collected until 2002-03. Therefore, we had to use other factors to work the data baseline back. As we were not able to make that data statistically accurate and to put intervals on it, from a statistician’s point of view, it was deemed to be a plausible estimate but not accepted as being statistically accurate. However, a Treasury assessment done in a different way came within 2% of our estimate. So we were working with figures that were plausible and could be taken forward to demonstrate improvement, but they were not statistically accurate.

68. Mr Dallat: Surely you should have been at the forefront of this initiative. What methods were you using that did not hit at all on the reality of child poverty?

69. Mr McMillen: We ended up with statistical evidence that set the baseline for child poverty, which was within 2% of an assessment carried out by the Treasury but was not statistically rigorous in terms of professional statisticians’ standards. However, our statisticians were happy that that was a plausible and good target for us to work off, and that was the baseline that we worked from.

70. Mr Dallat: Do you agree that child poverty is one of our single greatest injustices? The Good Friday/Belfast Agreement was signed 11 years ago, and a promise was made in section 75 of the Northern Ireland Act 1998 that there would be equality. You have had all the available resources at hand to produce statistics that would allow political decisions to be taken to address the difficulties faced by the most vulnerable people in society. Do you agree that you have failed in that regard?

71. Mr McMillen: I believe that we have produced baseline data that has allowed us to measure improvements in what we are doing to address child poverty. I accept that it has not met the required criteria, and we have put systems in place to try to improve on that. Child poverty is still a key target for the Department in the current PSAs.

72. Mr Dallat: Following on from that, I note from the 2008-09 delivery report that the delay in achievement against this target: “reflects outstanding decisions on a measurement for severe child poverty".

Is that not a classic example of putting the cart before the horse?

73. Mr McMillen: When Ministers were developing the Programme for Government there was a desire to do something about severe child poverty, but there was no accepted definition of what child poverty was, nor was there a way to measure it. Nevertheless, Ministers wished to do something about it, and it is perfectly right that politicians should be able to say what is important and to express aspirations. Statisticians in the Department have been examining how we can measure severe child poverty; they have presented a number of options to Ministers and are awaiting a decision.

74. Mr Dallat: Do you agree that a definition should have been established before you started measuring it? Otherwise, you do not have a clue what you are measuring.

75. Mr McMillen: That is an important factor, but it should not necessarily limit the wishes of politicians and Ministers in setting targets for issues. Targets can, sometimes, be aspirational, after which we can try to find systems for measuring them. Certainly, where definitions can be arrived at beforehand, that makes it much easier to measure performance.

76. Mr Dallat: Do you agree that you were shooting at a target that you could not see, because you did not know what the target was?

77. Mr McMillen: At that stage, yes.

78. Mr Dallat: That is an awful indictment, given the levels of inequality in Northern Ireland that affect children, and, particularly, children who live in child poverty as we speak. Let us hope, Chairperson, that something positive comes out of this sooner rather than later. Otherwise, this Committee is wasting its time.

79. Mr McMillen: There are two sets of targets. There are targets for child poverty, which have statistics and measures agreed with them. We are trying to reach agreement on a definition of, and measurements for, severe child poverty, for which Ministers set targets.

80. Mr Dallat: Do you agree that those were the targets that you should have been focused on — the ones for severe child poverty?

81. Mr McMillen: When we can put a definition on it. Everyone should be focused on the targets for severe child poverty.

82. Mr Beggs: The report sets out specific limitations in the old PSA targets for the Department of Agriculture and Rural Development (DARD), including a failure:

“to provide baseline figures or describe how a net increase in jobs would be calculated".

Incorrect baseline figures were provided in another instance, and, in a third case, it was found that:

“the data system used to determine baseline information was not subsequently used".

83. I will turn to the new system, and, in particular, PSA 4, ‘Supporting Rural Businesses’. One of your new targets is to:

“reduce by 25% the administration burden on farmers and agri-food businesses by 2013."

Can you outline the baseline position for that target and define each of the key terms in it?

84. Mr Lavery: Under PSA 4 we have committed to reduce the administrative burden created by DARD on the agrifood sector by 25%. To take that forward, we had an independent review of the current burden on the agrifood sector. That review reported on 30 June and has completed its public consultation period. On the basis of the responses to that consultation and our own review of it, we will make a Government response.

85. That review used an international standard methodology to measure the impact of red tape on business, and the Department expects that that methodology will be followed through to measure its performance.

86. Mr Beggs: Can you define what that target is? A target must be SMART (specific, measurable, attainable, realistic and timely) if it is going to work; it must be clearly measurable. What is the Department actually measuring?

87. Mr Lavery: The Department will measure the burden on a farmer of, for instance, having to read, absorb and comply with the guidance to complete the Integrated Administration and Control System form or the single farm payment scheme form; being required to accompany a veterinary officer in the course of a brucellosis or bovine TB test; and completing his claim form. All of those operations take time, and that time will be calculated in accordance with the standardised methodology and converted into a financial impact. Currently, the sum total of the financial impact has been calculated at £50 million per year, and the Department is striving to impact on that figure.

88. Mr Beggs: Are you confident that an objective rather than subjective measure — which could cause great variation in the figures produced — will be used?

89. Mr Lavery: Absolutely. The Department will have an independent assessment of its performance; we will not simply be assessing it internally and marking our own homework. The Department will submit its findings to an external reviewer.

90. Mr Beggs: OK. Moving on, the fundamental principle of PSAs is to measure performance against the key business objectives of a Department. Paragraph 2.19 of the report refers to a complicated target on the annual supply of timber using agreed sale lots. It is not clear whether that target gets to the heart of the efficiency and effectiveness of the Forest Service, which is one of the Department’s key business areas.

91. Do you accept that measuring wood output does not measure the efficiency or the effectiveness of the Forest Service, as the Department could sell more wood by dropping its prices, or move too much wood and become unsustainable? Do you agree that that is not a SMART target?

92. Mr Lavery: First of all, it is important to have a range of targets when undertaking performance measurement. The more targets a Department has, the better it is for the public and for managers who are trying to drive performance. In this —

93. Mr Beggs: What does that target tell the Department?

94. Mr Lavery: In that case, the output target for the Forest Service was purposely set at 400,000 cubic metres of timber per year. The performance was driven to achieve that level of output, and the Forest Service was successful in achieving that target throughout the PSA period.

95. That, in itself, is very important and significant, but the key message behind that target was to reassure the timber processing industry that, during that three-year period, the Department did not intend to have an increase or a diminution in supply. That was an important reassurance to an industry that is a substantial employer in rural areas.

96. Mr Beggs: What does that target tell the Department about the efficiency or the effectiveness of its business? Are there any commercial-style targets in that area? The setting of an output target does not provide that commercial information on its own.

97. Mr Lavery: In addition to that target there is a suite of targets contained in the Forest Service business plan each year, on which the Department reports. It is that accumulated position that allows the Department to measure the efficiency and effectiveness of the Forest Service. In the area of timber supply, the Department benchmarks its prices and performance against the rest of the UK and the Republic of Ireland, and it examines the out-turn prices that it achieves. Therefore, there is a suite of commercially-driven targets in addition to the output target.

98. Mr Beggs: Would it not have been better to include those targets in the Department’s PSA?

99. Mr Lavery: At the time, we were under a regime that invited Departments to specify 10 targets that encapsulated them. The Department of Agriculture and Rural Development is complex and wide-ranging, and we made the best selection that we could at the time in the knowledge that the Forest Service was publishing its annual performance publicly.

100. Mr Beggs: I will move to another target. Appendix 1 of the report states that PSA target 1 seeks to:

“Reduce the gap in agricultural Gross Value Added (GVA) per full time worker equivalent (measured as Annual Work Units) between NI and the UK as a whole by 0.6 of a percentage point per annum between 2003 and 2008, i.e. from 34% in 2003 to 31% in 2008.”

Will you explain the meaning of “annual work unit”? Most people have an idea about GVA. However, that sentence is a very complicated construction, never mind how difficult it is to understand its meaning. What does it mean to the average man on the street who is meant to be reading these reports and assessing government actions?

101. Mr Lavery: That target was popular with the economists, if perhaps less popular with the man on the Clapham omnibus. However, the idea was to recognise the gap in productivity between Northern Ireland industry and the average productivity in the United Kingdom, and then to strive to reduce it. To do that, the Department has a suite of programmes that range from education and training to capital grants. We were trying to encapsulate the overall impact of those programmes, which, after all, must be intended to improve the competitiveness of our agriculture industry versus that in the rest of the United Kingdom and worldwide.

102. We had to try to get an overall measure. In that context, we had to produce a unit, which became the annual work unit. It is simply a way of finding a statistical unit that can be used as a unit cost or, in this case, a unit of performance. The industry has a wide range of working practices; there are full-time farmers, part-time farmers, farmer spouses and labourers. We reduced all those roles to a particular annual work unit, which is a statistic that can be used as an agriculture performance measurement that is acknowledged across the United Kingdom and that enables us to benchmark against the UK average.

103. Mr Beggs: Why was the targeted improvement so modest at 0·6% a year? Moreover, why did a 31% gap remain at the end of the period? If the target is so modest and the outcome so minimal, is all the effort to record the figures worthwhile?

104. Mr Lavery: A 3% improvement in productivity compared with the United Kingdom average would have equated to around a 10% improvement in our relative position. That would have been significant. However, the difficulty with this target, as the report makes clear, is that gross value added is a good way to measure the health of a sector or the health of a region. It is not a good way to measure the impact of our performance. We have discontinued its use to measure performance, because gross value added encapsulates many factors, most of which are outwith our control, including the exchange rate and movement in prices, which, unfortunately for our industry, is happening in both directions at the moment.

105. Mr Beggs: Have you set this as another target that has not been a SMART target? It must be borne in mind that SMART targets are a fundamental thing taught to anyone in business. Do you accept that this is the third area where you have not had a SMART target?

106. Mr Lavery: At that time, the focus was on outcome rather than output. SMART targets are a very good way of measuring the output of an area. I could specify that during the course of 2008-09 I would train 1,500 people and that they would arrive at a certain level of qualification. That is a SMART target; it measures the output. Our focus at that time was very much on outcome, and what that would mean to the economy. That target was designed to try to convey an overall economic out-turn.

107. Mr Donaldson: I declare an interest as a former Minister in OFMDFM, though not during the period covered by the report. Paragraph 2.3 notes that senior managers in Departments are ultimately responsible for the quality of PSA data systems and so on, yet paragraph 2.4 shows that the departmental management boards appear not to have taken an interest in PSA quality control and data accuracy — and that is not exclusive to your Department by any means. What systems and controls does DARD have in place to enforce that quality assurance function?

108. Mr Lavery: First, we have sought to comply with the guidance that OFMDFM has helpfully issued. Therefore, I chair a PSA delivery board, and that board meets quarterly. Each quarter we receive a progress report on our PSA targets, and we review those targets and look at any data quality issues that may present. The six-monthly reviews go to the Minister and the Committee for Agriculture and Rural Development, and we take care that they are also seen by our entire board, including the independent members. Each PSA now has a senior responsible officer (SRO) who is a member of the Senior Civil Service, and those officers are supported by data quality officers. Data quality officers are under absolutely no illusion that they are responsible for precisely that: the quality of the data that goes in to support the target, and so forth. That is the system.

109. Mr Donaldson: Yes; that is the system, but the evidence presented in the report indicates that, although management boards took an interest in ensuring that targets were in place and monitoring progress towards the achievement of those targets, when it comes to the PSA data systems — the data accuracy and quality control — there was no evidence that Departments had put in place formal guidance or policies specifically in relation to the standards expected. Although you have lines of management, which is good, have you now put in place those systems? Have you now got policies and formal guidance specifically designed to establish whether the PSA data systems, quality control and data accuracy are working for you?

110. Mr Lavery: I can offer you some reassurance. First, we have learned lessons from the Audit Office report. Secondly, we have ensured that there is a delivery board in the new PSA framework, which I chair. Because the delivery board is focusing on PSA performance, it has the time and the focus to drill down into the PSAs. Perhaps the departmental management board would not have the time to go that far in its meetings. Having a separate board to do that work will, obviously, improve the focus.

111. The third reassurance is that the Committee for Agriculture and Rural Development, I am delighted to say, takes a keen interest in the performance of the Department, and specifically our reporting of the performance on PSA targets. With that degree of invigilation, interrogation and the stimulating enjoyment of debate, I am satisfied that no stone will be left unturned.

112. Mr Donaldson: I am afraid that we are not, Mr Lavery. The problem is that I am not hearing that you have put in place formal guidance and policies to deal with this. It is all very well being able to drill down, but if you do not know what you are looking for — the standards that are required for quality assurance, data accuracy and quality control — how are you able to assess whether the people who are doing the drilling are doing their job and getting to the core issues?

113. Mr Beggs referred to your targets, and you gave an explanation for them. Perhaps it might assist if you were able to more clearly establish whether the whole system is delivering in relation to data control. I am anxious to hear whether you have put in place formal guidance or policies.

114. Mr Lavery: In that case, there are two further reassurances. First, the good practice checklist, which is contained in the annexe to the Audit Office report, was sent to all of our SROs and our data quality officers. To that extent, people know the standard; they know what they are trying to do.

115. Secondly, we have commissioned our internal auditors to carry out an in-depth review, specifically to establish whether DARD PSA data systems and reporting procedures are compliant with the good practice checklist. That has been commissioned, and it will report at the end of October. Thirdly, the guidance that was provided by OFMDFM in 2007 has been closely followed by DARD.

116. Mr Donaldson: It is good that we have guidance. Are you able to provide the Committee with a copy of the policies that you have to monitor the progress that is being made, particularly in relation to the standards that are expected from PSA data systems?

117. Mr Lavery: I am happy to do so.

118. Mr Donaldson: Although the report identified several weaknesses in the data systems that were used by your Department to support PSA targets in 2006-07, paragraph 1.12 outlines assurances from OFMDFM that things have improved significantly, and we heard the evidence from Mr McMillen about that. Can you explain to the Committee exactly how you have ensured that the data systems that support more recent PSA targets for your Department are robust?

119. Mr Lavery: All of the PSA targets were developed in the context of guidance that was set out by OFMDFM and circulated in the Department. They have all been the subject of reporting on progress to date. They have also benefited from the draft and final reports of the Audit Office in this investigation being circulated to the business areas named in the report, and from the good practice checklist that was circulated to all of the SROs and the data quality officers. Following the completion of the process, the memorandum of reply and the Public Accounts Committee’s conclusions will be circulated in the Department and specific lessons will be learned.

120. Mr Donaldson: Paragraphs 2.14-2.17 outline the need to involve professionals in the PSA process. Therefore, in reference to your new PSA, can you explain the extent to which your professional economists or statisticians were involved in designing your targets? What did your Department do as a result of its advice to ensure that each data system was fit for purpose? Going back to the point that was made by Mr Beggs, if you are falling well below a PSA target, do you involve your economists and statisticians to assess why that is the case and whether the target is robust?